Webhooks as we know them have been around for more than 10 years. In 2007 Jeff Lindsay, who at the time was working at NASA as a Web Systems Architect, started bringing the webhook idea to light, although it took a couple of years before it really gained traction. GitHub has used webhooks since late 2010, but only at the beginning of 2014 did it acknowledge their full potential and make them one of its primary public features, offering more configuration, customization and debugging options to anyone using webhooks. Since then "webhook" has become a buzzword in the developer world. But let’s quickly go over what it really is.

The way Jeff Lindsay describes it in his first blog post about the next big thing is: “Web hooks are essentially user defined callbacks made with HTTP POST. To support web hooks, you allow the user to specify a URL where your application will post to and on what events. Now your application is pushing data out wherever your users want. It’s pretty much like re-routing STDOUT on the command line.”

So a webhook is a tool or process that lets users receive important data whenever something happens on the web service side. It means that neither polling for status changes nor long waits on synchronous HTTP requests are required. The service simply uses the URL provided by the user to notify them and send along the appropriate data.

Technically, providing webhooks to users is as simple as adding functionality that sends certain data on certain events to a dynamic URL. On the user’s side, however, there has to be an HTTP server that can receive the data at the provided URL endpoint. Webhooks have enabled server to server communication in a lot of automation scenarios across many services.
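To make the two sides concrete, here is a minimal sketch in Python using only the standard library. It is an illustration, not how any particular service implements webhooks: the names `WebhookReceiver`, `send_webhook` and `start_receiver`, the JSON payload shape and the 204 acknowledgement are all assumptions made for the example.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # callbacks collected by the receiver, in arrival order


class WebhookReceiver(BaseHTTPRequestHandler):
    """User side: the HTTP endpoint behind the URL given to the service."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        received.append({"path": self.path, "body": body})
        self.send_response(204)  # acknowledge receipt, no response body
        self.end_headers()

    def log_message(self, *args):
        pass  # silence the default per-request logging


def send_webhook(url, event, data):
    """Service side: POST an event to the URL the user registered."""
    payload = json.dumps({"event": event, "data": data}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


def start_receiver():
    """Start the receiver on a free local port; returns (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), WebhookReceiver)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}"
```

Calling `start_receiver()` and then `send_webhook(base_url + "/hooks/task-done", "task.finished", {"id": 1})` delivers one event to the local receiver and appends it to `received`. The path and event name here are made up for the example.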

How to test webhooks?

Now that almost every service out there uses webhooks and assures its users that the service runs smoothly, it is essential to be able to test them. As I mentioned before, from the user’s perspective there is an HTTP server that has to deal with webhook callbacks. This means that webhooks also have to be testable during release cycles. Let’s go over a webhook feature process sequence graph.

In the example above a user requests the creation of a long running task, and the service sends a callback once the task has finished running. The callback usually contains job status information and other data based on the creation request.

To create a webhook for a certain type of task notification, the tester first needs a real web server to receive the callback. This is the first dependency you will need for webhook testing. The next step for the tester is to request Type A task creation from the test service and receive a successful response status confirming that the creation has been initiated. Once the test service is done with the creation, it should send the callback to the webhook URL. This is where the tester needs to verify the data that is received. Performance should be considered as well: the time it takes for the task to be created and reported back matters, especially when real time notifications are in place.

This testing process becomes a little more complicated when there is a requirement for automation, which will almost certainly be the case for regression test coverage. It brings the additional requirement of linking the callback server (user side) to the test framework so that the callback response can be analysed. And what if performance data gathered from a single user test scenario is not enough? This is where it becomes interesting.

How to load test webhooks?

We have gone through testing from a single user’s perspective, where you as a tester have to set up an HTTP service that can receive the callback response and possibly forward it. The problem with multiple users is that the tester has to map callback responses to the initial task creation requests. A single webhook data flow has to be isolated from tens or even thousands of others. In the real world this is simple, as each user deals only with their own webhooks. As a testing scenario, however, it requires a lot more logic for handling the callback responses.

If a tester manages each user in a separate thread for concurrent requests, there also has to be a separate process that distributes the webhook callback response data to these threads. Remember that performance data has to be gathered as well, so both the time of the task creation that triggers the callback and the time when the callback is received have to be clocked. Functional assertions also have to be made on the data that came back: the tester has to make sure it correlates with the initial request.
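The distribution logic described above can be sketched roughly as follows. This is one possible design, assuming each simulated user runs in its own thread and each callback carries an ID that the test generated beforehand. The `CallbackRegistry` class and its method names are hypothetical and do not come from any existing framework.

```python
import queue
import threading
import time


class CallbackRegistry:
    """Routes each incoming callback to the simulated user that triggered it."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pending = {}  # callback_id -> (trigger time, inbox of the waiting user)

    def register(self, callback_id):
        """Call right before sending the task creation request."""
        with self._lock:
            self._pending[callback_id] = (time.monotonic(), queue.Queue())

    def deliver(self, callback_id, payload):
        """Call from the HTTP handler; returns False for unknown callback IDs."""
        received_at = time.monotonic()  # clock the arrival before any routing work
        with self._lock:
            entry = self._pending.get(callback_id)
        if entry is None:
            return False
        entry[1].put((received_at, payload))
        return True

    def wait(self, callback_id, max_duration):
        """Block the user thread until its own callback arrives.

        Returns (latency_seconds, payload) or raises TimeoutError.
        """
        with self._lock:
            triggered_at, inbox = self._pending[callback_id]
        try:
            received_at, payload = inbox.get(timeout=max_duration)
        except queue.Empty:
            raise TimeoutError(f"no callback received for {callback_id}") from None
        finally:
            with self._lock:
                self._pending.pop(callback_id, None)
        return received_at - triggered_at, payload
```

Each user thread calls `register` before it triggers the task and `wait` afterwards, while the callback server calls `deliver`. The returned latency is the time between those two clock readings, and the payload can then be asserted against the original request.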

So if you are already using a load test framework for your service but haven’t load tested your webhooks yet, it may be time to check whether the framework supports this. If not, you may end up spending time writing a service that generates dynamic URLs so that callback responses can be mapped to individual task creation requests. There are several ways to handle that, but let me tell you how we do it with Apimation.
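For readers going the do-it-yourself route, a dynamic callback URL can be as simple as a base address plus identifiers that the callback server later parses back out. The sketch below is only an assumption about how such a scheme might look. The `/callbacks/<owner>/<callback id>` layout and both function names are invented for illustration.

```python
import uuid
from urllib.parse import urlsplit


def new_callback_url(base_url, owner_id):
    """Return (callback_id, url) with a fresh, unique callback ID in the path."""
    callback_id = uuid.uuid4().hex
    url = f"{base_url.rstrip('/')}/callbacks/{owner_id}/{callback_id}"
    return callback_id, url


def parse_callback_path(url_or_path):
    """Recover (owner_id, callback_id) from an incoming callback URL or path."""
    parts = urlsplit(url_or_path).path.strip("/").split("/")
    if len(parts) != 3 or parts[0] != "callbacks":
        raise ValueError(f"not a callback path: {url_or_path!r}")
    return parts[1], parts[2]
```

The test registers the generated URL as the webhook target, and the callback server uses `parse_callback_path` on every incoming request to find out which simulated user and which task creation request the callback belongs to.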

How do we load test webhooks with Apimation?

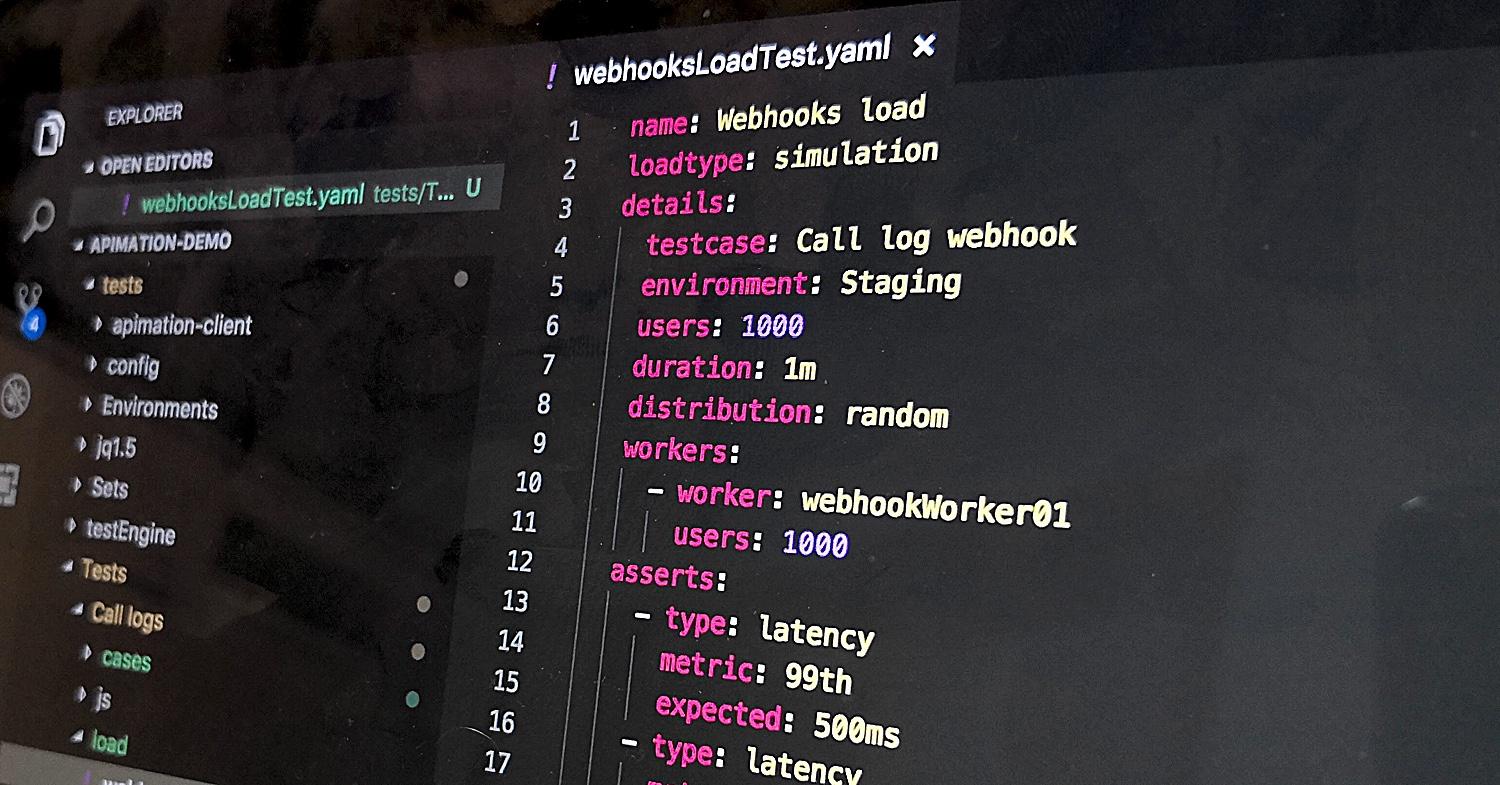

Apimation is an API test automation tool. It can test any kind of web service based on the HTTP communication protocol. We made sure that Apimation offers the most advanced features without any code writing. It uses YAML test definition files to determine what needs to be tested and how, so as a tester you define everything in a human readable YAML format. All Apimation test features are generic, so they can be applied to any web API testing.

Because we also acknowledge the power of and need for webhooks, Apimation offers a built-in webhook testing feature. It means that all of the complicated mapping and callback routing is done for the tester. I will go over this feature both in terms of how it works from the user’s perspective and how we made it happen.

Let’s start by going through what the tester needs to do to make it work in Apimation.

- Define the step in YAML that makes the request to create a webhook for a certain long running task. Specify the method, URL, headers and body, and add a response status assertion. For the webhook callback URL just provide an Apimation function call: $callbackServiceURL(callbackType)

- Define the long running task creation request step in YAML: again specify the method, URL, headers and body, add a response status assertion and, most importantly, add the callbackTriggerID and callbackMaxDuration properties (callbackTriggerID should match the callbackType value passed as the argument of the callbackServiceURL function call).

- Define the last step as type callback and put assertions on the callback time, callback response or header data (all of this is built in). The tester can also extract data to pass on to the next step if needed (additional user flow).

- Create a test case definition YAML file and select the steps in the same sequence as above: step 1: create the webhook with the generated URL; step 2: create the task with the callback trigger data; step 3: receive the callback and assert values and latency.

- Once the test case is defined, reference it in the load test scenario YAML definition file. Set the test case name, number of users and duration, and add performance assertions if needed (this makes sure that the overall response latency is acceptable).

NB! When the load test is executed, all requests are made from a test agent that can be deployed behind a firewall or even in a local environment.

So in 5 easy steps the load test scenario is ready for testing any web service with webhooks. It’s time to explain what we have under the hood and how we make it happen. For that purpose I have created the chart below, which explains the test engine architecture.

Let’s follow the flowchart. Blue arrows show how the YAML test files are synchronized with the Apimation database, which is later used for test execution:

- The CLI client parses the YAML data and sends JSON data to the Data management API to create and update test assets (test steps, test cases, test sets, test environments) (1.1 and 1.2)

- The Data management API writes everything to the database cluster (1.3)

Once the test assets are synchronized, the CLI client can be used to execute the test. The test flow is marked with green arrows and the steps are as follows:

- When the CLI client receives the command for test execution, the test request is sent to the Test Engine Server (2.1 and 2.2)

- The test request is created once the UUID values of all test cases used in the test have been fetched from the database (2.3)

- The test request is then transformed into a JSON test request message and fed into the message broker data channel that matches the test request type (in this case load-test) (2.4)

- Test Engine Master (TEM) listens to the message broker queues and consumes the corresponding test request message (2.5)

- Based on the UUID values in the test request, TEM fetches the test data (2.6)

- The test data is then used to create jobs, which are distributed to the Test Engine Worker (TEW) (2.7)

- In a webhook test, the first request creates the webhook, providing the URL generated by the TEW (it includes the worker UUID and the callback ID). (2.8)

- The next request triggers the webhook callback (the long running task creation request to the TEST API service). Behind the scenes the TEW starts a callback timer with the callbackMaxDuration value that the user set in the YAML step definition file. When the timer runs out, a timeout error is sent to the callback channel that the user thread reads on the callback response step. The TEW also saves the callback trigger time under the callback ID. (2.8)

- When the long running task creation is done on the TEST API service side, the service sends the callback to the URL that was generated by the TEW. The callback request is received first by the Callback routing service, which routes it to TEM. (2.9)

- TEM forwards the callback data through a websocket back to the TEW. The TEW then writes the data to a channel stored in a map under the callback ID key (2.10)

- On the last step of the test case execution the TEW stops the callback response timer and clocks the time of the callback (based on the Callback routing service time). It also executes all assertions against the callback data and appends the outcome to the test case results object (in the scope of one user) (2.11)

- When all results are gathered, the TEW closes all active channels and subthreads and sends everything back to TEM. TEM saves the results to the DB, where they are available to the Test Engine Server (TES) whenever the CLI client requests them.

In a user simulation load test scenario every simulated user goes through steps 7 to 11 (2.8 to 2.11).
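The callback timer and channel from the steps above can be illustrated with a small Python sketch. It is not the Apimation implementation. It only shows the general pattern of a timer that writes a timeout error into the same channel the user thread reads from, so that whichever arrives first wins. `CallbackTimeout`, `arm_callback_timer` and `await_callback` are hypothetical names, and a `queue.Queue` stands in for the channel.

```python
import queue
import threading


class CallbackTimeout(Exception):
    """Placed on the channel when no callback arrived within the maximum duration."""


def arm_callback_timer(inbox, max_duration):
    """Start a timer that puts a timeout error on the inbox when it expires."""
    error = CallbackTimeout(f"no callback within {max_duration} seconds")
    timer = threading.Timer(max_duration, inbox.put, args=(error,))
    timer.daemon = True  # do not keep the process alive for a pending timer
    timer.start()
    return timer


def await_callback(inbox, timer):
    """Read whichever arrives first: the callback payload or the timeout error."""
    item = inbox.get()
    timer.cancel()  # harmless if the timer has already fired
    if isinstance(item, CallbackTimeout):
        raise item
    return item
```

The user thread arms the timer when it sends the task creation request and calls `await_callback` on the callback step. The routing side only has to `put` the callback payload on the same queue, so the waiting code does not need a separate timeout path.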

As you can see, load testing webhooks is not an easy thing, but it is totally doable. And we need to remember that for the tester it has to be as straightforward as possible, with as few steps as possible. If you want to jump into testing webhooks right away, I recommend contacting us and we will get you going with Apimation. Otherwise, if you are determined to create a webhook load testing solution on your own, we are always open to a friendly conversation and support!

References:

- https://trends.google.com/trends/explore?date=all&q=webhook

- https://github.com/blog/1778-webhooks-level-up

- http://progrium.com/blog/2007/05/03/web-hooks-to-revolutionize-the-web/

Useful links: